Exam 1 Flashcards

statistics

scientific analysis of data in whose generation randomness/chance played some part

necessary for handling data: math alone isn’t enough, because randomness is unavoidable in science

ex. how does the weight of a baby depend on its age? how does BP depend on genetics? Monty Hall Problem.

direction: probability is _____, while statistics is ______

probability is deductive: it starts with an “if” statement about reality, then makes a probability calculation about data. ->

statistics is inductive: it starts with data and uses the relevant probability calculations to make a statement about reality. <-

zig-zag relationship

probability notation

events: A, B, …

probability that event A will happen = Prob(A)

derived events

A u B: union, A or B or both

A n B: intersection, both

mutually exclusive events

F and G are mutually exclusive if they cannot both happen in the same experiment. If F, G, …, H are mutually exclusive, Prob(F u G u … u H) = Prob(F) + Prob(G) + … + Prob(H).
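A minimal sketch of the addition rule, assuming one roll of a fair six-sided die. The die and the particular events F and G are illustrative choices, not from the cards.

```python
from fractions import Fraction

# Assumed example: one roll of a fair die, all six outcomes equally likely.
outcomes = {1, 2, 3, 4, 5, 6}

def prob(event):
    """Probability of an event (a set of outcomes) when outcomes are equally likely."""
    return Fraction(len(event & outcomes), len(outcomes))

F = {1, 2}  # the roll is 1 or 2
G = {5}     # the roll is 5

# F and G share no outcomes, so they are mutually exclusive.
mutually_exclusive = (F & G == set())

p_union = prob(F | G)        # Prob(F u G)
p_sum = prob(F) + prob(G)    # Prob(F) + Prob(G)
print(mutually_exclusive, p_union, p_sum)  # True 1/2 1/2
```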

independence

F and G are independent iff the probability of their intersection, Prob (F n G) = Prob(F) x Prob(G).

We often assume independence.

When F and G are independent, substituting Prob(F n G) = Prob(F) x Prob(G) into the conditional probability formula makes Prob(G) cancel, leaving Prob(F | G) = Prob(F). AKA F and G are independent if G having occurred doesn’t change the prob that F occurs.

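If two events (each with nonzero probability) are mutually exclusive, they cannot be independent. The opposite may or may not be true: events that are not independent may or may not be mutually exclusive.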

conditional probabilities

the probability that F occurs given that G has occurred

Prob (F | G) = Prob(F n G) / Prob(G)
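A short sketch of the conditional probability formula and the independence check, again assuming a fair die. The events F (even roll) and G (roll of 4 or less) are hypothetical choices picked so that the two events come out independent.

```python
from fractions import Fraction

# Assumed example: one roll of a fair die.
outcomes = {1, 2, 3, 4, 5, 6}

def prob(event):
    """Probability of an event (a set of outcomes) when outcomes are equally likely."""
    return Fraction(len(event & outcomes), len(outcomes))

F = {2, 4, 6}     # the roll is even
G = {1, 2, 3, 4}  # the roll is 4 or less

# Prob(F | G) = Prob(F n G) / Prob(G)
p_f_given_g = prob(F & G) / prob(G)

# Independence check: Prob(F n G) == Prob(F) x Prob(G)
independent = prob(F & G) == prob(F) * prob(G)

print(p_f_given_g, prob(F), independent)  # 1/2 1/2 True
```

Here knowing that G occurred leaves the probability of F unchanged at 1/2, which is what independence means.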

discrete and continuous random variables

“concept of the mind” numbers (quantities thought about before the experiment is carried out)

discrete RV: a conceptual numerical quantity which, in some future experiment, will take one or other of a discrete set of values (usually 0, 1, 2, 3…) with known or unknown probabilities

continuous RV: can take any value in a continuous range of values

RVs are written with upper case letters.

data

the observed values of RVs after the relevant experiment has been carried out. written with lower case letters.

presenting probabilities

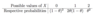

tableau with possible values of X and probabilities of X (probs sometimes known, sometimes unknown)

or graph (known)

parameters

some usually unknown numerical value, denoted with a greek letter

discrete RV notation

Prob(X = v_i) is shorthand for “the prob that, once the experiment is carried out, the observed value x of X will be v_i”

conditions for binomial distribution

1) we conduct a fixed number of trials

2) there are only two possible outcomes on each trial. We call these success and failure

3) the outcomes of the various trials are independent

4) the probability of success, θ, is the same on all trials

RV of interest is the number of successes, X, in n trials

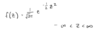

third method of presenting probability distribution

(n x), read “n choose x”, is the # of orderings in which there are x successes in the n trials = n! / ((n - x)! x!)

the second part, θ^x (1 - θ)^(n - x), is the probability of getting x successes in n trials in a specified order

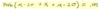
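A minimal sketch of the binomial formula as the product of its two parts. The function name `binomial_prob` and the coin example (n = 3, θ = 0.5) are assumptions made for illustration.

```python
from math import comb

def binomial_prob(x, n, theta):
    """Prob(X = x) for a binomial RV X with n trials and success probability theta."""
    orderings = comb(n, x)                             # n! / ((n - x)! x!)
    one_ordering = theta**x * (1 - theta)**(n - x)     # x successes in one specified order
    return orderings * one_ordering

# Hypothetical example: 3 flips of a fair coin, X = number of heads.
probs = [binomial_prob(x, 3, 0.5) for x in range(4)]
print(probs)  # [0.125, 0.375, 0.375, 0.125]
```

The four probabilities add to 1, as they must for a probability distribution.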

mean

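NOT AN AVERAGE; a parameter, denoted μ. for binomial distribution, μ = nθ

it is the balance point (center of gravity) of the probability distribution, not in general the point with equal area on each side (that is the median)

mean is a property of the thing being studied (its probability distribution), not of the data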

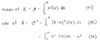

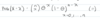

MUST KNOW LONG AND SHORT FORMULAS FOR MEAN
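A sketch comparing the two formulas for a binomial RV, assuming the long formula is the usual sum of each possible value times its probability, μ = Σ v_i Prob(X = v_i), and the short formula is μ = nθ. The values n = 10 and θ = 0.3 are hypothetical.

```python
from math import comb

n, theta = 10, 0.3  # hypothetical values for illustration

def binomial_prob(x, n, theta):
    """Prob(X = x) for a binomial RV with n trials and success probability theta."""
    return comb(n, x) * theta**x * (1 - theta)**(n - x)

# Long formula: sum over all possible values of (value x its probability).
mean_long = sum(x * binomial_prob(x, n, theta) for x in range(n + 1))

# Short formula for the binomial distribution.
mean_short = n * theta

print(mean_long, mean_short)  # the two agree up to floating-point rounding
```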

average

calculated from data, estimates the mean, denoted x̄

precision depends on sample size and the properties of the RV X̄, the “concept of the mind” average before we roll the die

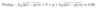
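A small simulation sketch of the average x̄ as an estimate of the mean μ, assuming a fair die (so μ = 3.5). The sample sizes and the fixed seed are arbitrary choices for illustration.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable

# Mean of a fair die: each value 1..6 has probability 1/6, so mu = 21/6 = 3.5.
mean = sum(range(1, 7)) / 6

def average_of_rolls(n):
    """x-bar: the average of n simulated rolls of a fair die (calculated from data)."""
    rolls = [random.randint(1, 6) for _ in range(n)]
    return sum(rolls) / n

small_sample_avg = average_of_rolls(10)
large_sample_avg = average_of_rolls(100_000)
print(mean, small_sample_avg, large_sample_avg)
```

The average from the larger sample will typically land much closer to 3.5, which is the sense in which precision depends on sample size.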

variance

always denoted σ²

it is a measure of the spread-outness of the probability distribution of X relative to the mean of X

for binomial distribution var of X = nθ (1 - θ)

important because the precision of x̄ as an estimate of μ depends on the variance of the RV X̄

MUST KNOW LONG AND SHORT FORMULAS FOR VARIANCE
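A sketch comparing the two variance formulas for a binomial RV, assuming the long formula is the usual σ² = Σ (v_i - μ)² Prob(X = v_i) and the short formula is σ² = nθ(1 - θ). The values n = 10 and θ = 0.3 are hypothetical.

```python
from math import comb

n, theta = 10, 0.3  # hypothetical values for illustration

def binomial_prob(x, n, theta):
    """Prob(X = x) for a binomial RV with n trials and success probability theta."""
    return comb(n, x) * theta**x * (1 - theta)**(n - x)

mu = n * theta  # binomial mean

# Long formula: sum of (value - mean)^2 x probability over all possible values.
var_long = sum((x - mu) ** 2 * binomial_prob(x, n, theta) for x in range(n + 1))

# Short formula for the binomial distribution.
var_short = n * theta * (1 - theta)

print(var_long, var_short)  # the two agree up to floating-point rounding
```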

complementary events

A^c is the event that A did not happen.

Prob(A^c) = 1 - Prob(A)
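A sketch of why complements are handy, assuming four independent rolls of a fair die. The event A = "at least one six" is an illustrative choice; its complement "no six on any roll" is much easier to compute directly.

```python
from fractions import Fraction

# Assumed example: 4 independent rolls of a fair die.
# A   = at least one six in the 4 rolls
# A^c = no six in any of the 4 rolls
p_no_six = Fraction(5, 6) ** 4      # Prob(A^c): independence lets us multiply
p_at_least_one_six = 1 - p_no_six   # Prob(A) = 1 - Prob(A^c)

print(p_no_six, p_at_least_one_six)  # 625/1296 671/1296
```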