SOA Probability Flashcards

If A ⊂ B then (A ∩ B) =

(A ∩ B) = A

Probability Generating Function (PGF) is defined as

P_X(t) =

P_X(t) = E[t^X]

E[X(X - 1)] = 2nd factorial moment, the 2nd derivative at t = 1 of what Generating Function?

PGF - Probability Generating Function
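A minimal sketch of why the PGF gives E[X(X - 1)]: for a finite PMF, P_X(t) = Σ Pr[X = x] ⋅ t^x, so differentiating twice and setting t = 1 leaves Σ x(x - 1) ⋅ Pr[X = x]. The fair die and the function name are assumptions chosen for illustration.

```python
from fractions import Fraction

def pgf_second_derivative_at_one(pmf):
    """Return P''_X(1) = sum of x(x-1) * Pr[X = x] for a finite PMF {value: probability}."""
    return sum(x * (x - 1) * p for x, p in pmf.items())

# Assumed example: a fair six-sided die.
die = {x: Fraction(1, 6) for x in range(1, 7)}

second_factorial_moment = pgf_second_derivative_at_one(die)
mean = sum(x * p for x, p in die.items())

# E[X^2] = E[X(X-1)] + E[X], so Var[X] = E[X(X-1)] + E[X] - E[X]^2.
variance = second_factorial_moment + mean - mean ** 2
```

Here `second_factorial_moment` is 35/3 and `variance` is 35/12, which matches the usual variance of a fair die.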

E [X ∣ j ≤ X ≤ k] - continuous case

Integrate numerator from j to k

( ∫_j^k x ⋅ f_X(x) dx )

÷

( Pr ( j ≤ X ≤ k ) )
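A rough numerical sketch of the continuous case, using a midpoint-rule integral for both the numerator and the denominator. The density e^(-x) on x > 0, the interval [0, 1], and the helper name are assumptions for illustration only.

```python
import math

def conditional_mean_continuous(pdf, j, k, steps=100_000):
    """Approximate E[X | j <= X <= k] with the midpoint rule."""
    width = (k - j) / steps
    midpoints = [j + (i + 0.5) * width for i in range(steps)]
    numerator = sum(x * pdf(x) for x in midpoints) * width  # integral of x * f(x) from j to k
    denominator = sum(pdf(x) for x in midpoints) * width    # Pr(j <= X <= k)
    return numerator / denominator

# Assumed example: f_X(x) = e^(-x) for x > 0, conditioned on 0 <= X <= 1.
approx = conditional_mean_continuous(lambda x: math.exp(-x), 0.0, 1.0)

# Closed form for comparison: (1 - 2/e) / (1 - 1/e).
exact = (1 - 2 * math.exp(-1)) / (1 - math.exp(-1))
```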

Percentile for Discrete Random Variables

F_X(π_p) ≥ p

i.e., the CDF at π_p has to be at least p; π_p is the smallest value for which this holds
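One way to sketch the discrete percentile rule is to walk the CDF upward and stop at the first value where it reaches p. Taking the smallest such value is a common convention and is assumed here; the PMF and function name are made up for illustration.

```python
from fractions import Fraction

def discrete_percentile(pmf, p):
    """Return the smallest x with F_X(x) >= p for a finite PMF {value: probability}."""
    cumulative = 0
    for x in sorted(pmf):
        cumulative += pmf[x]
        if cumulative >= p:
            return x
    raise ValueError("p exceeds the total probability of the PMF")

# Assumed example PMF: F(0) = 1/5, F(1) = 1/2, F(2) = 1.
pmf = {0: Fraction(1, 5), 1: Fraction(3, 10), 2: Fraction(1, 2)}
median = discrete_percentile(pmf, Fraction(1, 2))
```

With this PMF the median is 1, because F(1) = 1/2 is the first CDF value that reaches 0.5.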

E [X | j ≤ X ≤ k] - Discrete Case

Sum numerator from x = j to k

( ∑ x ⋅ Pr[X = x] )

÷

( Pr [j ≤ X ≤ k] )
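A short sketch of the discrete case: sum x ⋅ Pr[X = x] over j ≤ x ≤ k, then divide by Pr[j ≤ X ≤ k]. The fair die and the helper name are assumptions for illustration.

```python
from fractions import Fraction

def conditional_mean_discrete(pmf, j, k):
    """Return E[X | j <= X <= k] for a finite PMF {value: probability}."""
    numerator = sum(x * p for x, p in pmf.items() if j <= x <= k)
    denominator = sum(p for x, p in pmf.items() if j <= x <= k)
    return numerator / denominator

# Assumed example: a fair six-sided die, conditioned on 2 <= X <= 4.
die = {x: Fraction(1, 6) for x in range(1, 7)}
result = conditional_mean_discrete(die, 2, 4)  # (2 + 3 + 4)/6 divided by 3/6
```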

Percentile for Continuous Random Variable

CDF F_X(π_p) = p

The CDF (not the density) at π_p has to equal p
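A continuous percentile solves F_X(π_p) = p, where F_X is the CDF. When the CDF cannot be inverted by hand, bisection is one simple option; the sketch below assumes a continuous, increasing CDF and uses 1 - e^(-x) as an illustrative example.

```python
import math

def continuous_percentile(cdf, p, lo, hi, tol=1e-12):
    """Solve cdf(x) = p on [lo, hi] by bisection (assumes cdf is continuous and increasing)."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if cdf(mid) < p:
            lo = mid   # percentile lies to the right of mid
        else:
            hi = mid   # percentile lies at or to the left of mid
    return (lo + hi) / 2

# Assumed example: F_X(x) = 1 - e^(-x), whose median is ln 2.
median = continuous_percentile(lambda x: 1 - math.exp(-x), 0.5, 0.0, 50.0)
```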

Finding mode of discrete random variable

calculate probabilities of each possible value and choose the one that gives the largest probability

Finding mode of continuous random variable

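Take the derivative of the density function, set it equal to 0, and solve for the mode (finding a local maximum of the function). Confirm the solution is a maximum, and compare it against the endpoints of the support.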
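Both mode rules can be sketched in a few lines. The PMF and the density 12x²(1 - x) on [0, 1] are assumed examples, not taken from the cards; the derivative is written out by hand rather than computed symbolically.

```python
def discrete_mode(pmf):
    """Return the value with the largest probability in a PMF {value: probability}."""
    return max(pmf, key=pmf.get)

def density(x):
    """Assumed density f(x) = 12 x^2 (1 - x) on [0, 1]."""
    return 12 * x ** 2 * (1 - x)

def density_derivative(x):
    """Derivative of 12x^2 - 12x^3, worked out by hand."""
    return 24 * x - 36 * x ** 2

# Setting 24x - 36x^2 = 0 gives x = 0 or x = 2/3; the interior root is the candidate mode.
candidate = 2 / 3
is_local_max = density(candidate) > max(density(candidate - 0.01), density(candidate + 0.01))
```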

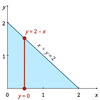

Cumulative Distribution Function (CDF) from a probability density function (PDF)

Integrate from the lowest value of X to the variable x itself

F_X(x) = ∫ f(t) dt, with t running from the lowest value of X up to x

Chebyshev’s Inequality

Pr( |X-µ| ≥ kσ ) ≤ ( 1 / k² )

How to break up the absolute value in Chebyshev’s Inequality

Pr( |X-µ| ≥ kσ )

=

Pr( (X-µ) ≥ kσ ) + Pr( (X-µ) ≤ -kσ )
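A quick check of both Chebyshev cards on a small example. The fair die and k = 1.4 are assumptions chosen so that the two tails are non-empty.

```python
import math
from fractions import Fraction

# Assumed example: a fair six-sided die.
die = {x: Fraction(1, 6) for x in range(1, 7)}
mu = sum(x * p for x, p in die.items())
variance = sum((x - mu) ** 2 * p for x, p in die.items())
sigma = math.sqrt(variance)

k = 1.4
two_sided = sum(p for x, p in die.items() if abs(x - mu) >= k * sigma)
upper_tail = sum(p for x, p in die.items() if x - mu >= k * sigma)
lower_tail = sum(p for x, p in die.items() if x - mu <= -k * sigma)

chebyshev_bound = 1 / k ** 2
```

Only the outcomes 1 and 6 lie at least kσ from the mean, so the two tails are 1/6 each and their sum, 1/3, sits below the bound 1/k² ≈ 0.51.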

Univariate Transformation CDF METHOD

From X to Y

1.) Given PDF of X find CDF of X

2.) Write F_Y( y ) = P( Y ≤ y ) and substitute the transformation Y = g(X)

3.) Restate CDF of Y using CDF of X,

then substitute CDF of X found in step 1 into CDF of Y

4.) Take Derivative of CDF of Y to find PDF of Y
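The four steps can be sketched on a small example, assuming X is Uniform(0, 1) and Y = X². Both the distribution and the transformation are illustrative choices; the final lines compare the hand-derived PDF of Y against a numerical derivative of its CDF.

```python
import math

def cdf_x(x):
    """Step 1: CDF of X ~ Uniform(0, 1), obtained from the PDF f_X(x) = 1 on (0, 1)."""
    return min(max(x, 0.0), 1.0)

def cdf_y(y):
    """Steps 2-3: F_Y(y) = P(X^2 <= y) = P(X <= sqrt(y)) = F_X(sqrt(y)), valid because X >= 0."""
    return cdf_x(math.sqrt(y)) if y > 0 else 0.0

def pdf_y(y):
    """Step 4: on (0, 1), F_Y(y) = sqrt(y), so f_Y(y) = 1 / (2 sqrt(y))."""
    return 1 / (2 * math.sqrt(y)) if 0 < y < 1 else 0.0

# Numerical check of step 4 with a central difference at y = 0.25.
y, h = 0.25, 1e-6
numeric_slope = (cdf_y(y + h) - cdf_y(y - h)) / (2 * h)
```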

Univariate Transformation PDF METHOD

From X to Y

1.) Get PDF of X if not given

2.) Find PDF of Y using the formula

f_Y( y ) = f_X( g⁻¹( y ) ) • | (d/dy) g⁻¹( y ) |

3.) Integrate PDF of Y to get CDF of Y if required
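A sketch of the PDF method, assuming X is exponential with rate 1 and Y = g(X) = 2X + 3, a one-to-one transformation. The distribution, the transformation, and the helper names are all illustrative assumptions; the midpoint-rule integral is only a sanity check that the resulting density integrates to about 1.

```python
import math

def pdf_x(x):
    """Step 1: PDF of X, assumed exponential with rate 1."""
    return math.exp(-x) if x > 0 else 0.0

def g_inverse(y):
    """Inverse of the assumed transformation Y = 2X + 3."""
    return (y - 3) / 2

def g_inverse_derivative(y):
    """d/dy of g_inverse, constant for a linear transformation."""
    return 0.5

def pdf_y(y):
    """Step 2: f_Y(y) = f_X(g_inverse(y)) * |d/dy g_inverse(y)|."""
    return pdf_x(g_inverse(y)) * abs(g_inverse_derivative(y))

def integrate(f, lo, hi, steps=100_000):
    """Midpoint-rule integral of f over [lo, hi]."""
    width = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * width) for i in range(steps)) * width

# Y takes values above 3; the tail beyond 63 is negligible for this density.
total_probability = integrate(pdf_y, 3.0, 63.0)
```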

Discrete Uniform PMF

1 / ( b - a + 1 )

Discrete Uniform E[X]

( a + b ) / 2

Discrete Uniform Var[X]

[ ( b - a + 1 )² - 1 ]

÷

12
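The three discrete uniform formulas can be checked by brute force over the integers a, a + 1, …, b. The endpoints a = 3 and b = 10 are arbitrary values picked for illustration.

```python
from fractions import Fraction

def discrete_uniform_moments(a, b):
    """Return (pmf value, mean, variance) for the uniform distribution on a, a+1, ..., b."""
    n = b - a + 1
    pmf = Fraction(1, n)
    mean = sum(x * pmf for x in range(a, b + 1))
    variance = sum((x - mean) ** 2 * pmf for x in range(a, b + 1))
    return pmf, mean, variance

a, b = 3, 10  # assumed endpoints
pmf, mean, variance = discrete_uniform_moments(a, b)

# The flashcard formulas, for comparison with the brute-force values.
formula_pmf = Fraction(1, b - a + 1)
formula_mean = Fraction(a + b, 2)
formula_variance = Fraction((b - a + 1) ** 2 - 1, 12)
```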

Bernoulli’s E[X]

p

Bernoulli’s Var[X]

pq, where q = 1 - p

Bernoulli’s MGF

pe^t + q

Bernoulli’s Variance Shortcut for Y = (a-b)X + b

(b - a)² • pq
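With Y = (a - b)X + b, Y equals a when X = 1 and b when X = 0, so the shortcut can be compared against a direct two-point variance. The values a = 5, b = 2, p = 3/10 and the function names are assumptions for illustration.

```python
from fractions import Fraction

def two_point_variance(a, b, p):
    """Variance of Y computed directly, where Y = a with probability p and Y = b with probability 1 - p."""
    q = 1 - p
    mean = a * p + b * q
    return (a - mean) ** 2 * p + (b - mean) ** 2 * q

def shortcut_variance(a, b, p):
    """Bernoulli shortcut: (b - a)^2 * p * q."""
    return (b - a) ** 2 * p * (1 - p)

p = Fraction(3, 10)  # assumed success probability
direct = two_point_variance(5, 2, p)
shortcut = shortcut_variance(5, 2, p)
```

Both routes give 189/100 for these values.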

Property of Expected Value (One RV): E[c] =

E[c]=c, c = constant

Property of Expected Value (One RV): E[c⋅g(X)] =

E[c⋅g(X)]= c ⋅ E[g(X)]

Property of Expected Value (One RV): E[g_1(X) + g_2(X) + … + g_k(X)] =

E[g_1(X) + g_2(X) + … + g_k(X)]

=

E[g_1(X)] + E[g_2(X)] + … + E[g_k(X)]
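The three expectation properties can be checked together on one small example. The fair die, the function 3X² + 2X + 5, and the helper name `expect` are assumptions for illustration.

```python
from fractions import Fraction

def expect(pmf, g):
    """Return E[g(X)] for a finite PMF {value: probability}."""
    return sum(g(x) * p for x, p in pmf.items())

# Assumed example: a fair six-sided die.
die = {x: Fraction(1, 6) for x in range(1, 7)}

constant = expect(die, lambda x: 5)                 # E[c] = c
scaled = expect(die, lambda x: 3 * x ** 2)          # E[c * g(X)]
lhs = expect(die, lambda x: 3 * x ** 2 + 2 * x + 5) # E[g_1(X) + g_2(X) + g_3(X)]

# The same quantity assembled term by term.
rhs = 3 * expect(die, lambda x: x ** 2) + 2 * expect(die, lambda x: x) + 5
```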