Probability Flashcards

(111 cards)

Definition of sample space

In an experiment, the set of all possible outcomes is the sample space (S)

Definition of an event and written form

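Any collection of possible outcomes of the experiment, i.e., any subset E of S (including S itself). Written form: E ⊆ S, e.g. E = {x ∈ S : x has the property defining E}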

7 set operations and laws

Union, intersection, complement (the operations); commutative, associative, and distributive laws, and De Morgan's laws (the properties those operations obey)
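The laws on this card can be spot-checked with Python's built-in `set` type. This is a minimal sketch assuming a small finite sample space; the sets `S`, `A`, `B`, `C` and the helper `complement` are hypothetical illustrations, and a check on one example is not a proof.

```python
# Hypothetical finite sample space and events, chosen only for illustration
S = set(range(1, 11))
A = {1, 2, 3, 4}
B = {3, 4, 5, 6}
C = {4, 6, 8, 10}

def complement(E):
    """Complement of E relative to the sample space S."""
    return S - E

# Commutative laws
assert A | B == B | A and A & B == B & A
# Associative laws
assert A | (B | C) == (A | B) | C and A & (B & C) == (A & B) & C
# Distributive laws
assert A & (B | C) == (A & B) | (A & C)
assert A | (B & C) == (A | B) & (A | C)
# De Morgan's laws
assert complement(A | B) == complement(A) & complement(B)
assert complement(A & B) == complement(A) | complement(B)
print("all set laws hold for this example")
```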

Definition of disjoint (mutually exclusive) events

Events A and B are mutually exclusive when their intersection is the empty set

Definition of pairwise mutually exclusive

Events A1, A2, A3, … are pairwise mutually exclusive if Ai ∩ Aj is the empty set for every pair i ≠ j

Partition

If A1, A2, A3, … are pairwise mutually exclusive and their union is S (∪Ai = S), then the collection {A1, A2, A3, …} partitions S
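The last three cards (disjoint, pairwise disjoint, partition) can be turned into two small checks on finite sets. This is a sketch; `pairwise_disjoint`, `is_partition`, and the die example are hypothetical names and data chosen for illustration.

```python
from itertools import combinations

def pairwise_disjoint(sets):
    """True if A_i ∩ A_j is empty for every pair i != j."""
    return all(not (a & b) for a, b in combinations(sets, 2))

def is_partition(sets, S):
    """True if the sets are pairwise disjoint and their union is S."""
    return pairwise_disjoint(sets) and set().union(*sets) == S

S = set(range(1, 7))  # outcomes of one die roll
print(is_partition([{1, 2}, {3, 4}, {5, 6}], S))     # True
print(is_partition([{1, 2, 3}, {3, 4}, {5, 6}], S))  # False: 3 is shared
print(is_partition([{1, 2}, {3, 4}], S))             # False: 5 and 6 are missing
```

Note that "pairwise" matters: every pair must be disjoint, not just the collection as a whole having an empty common intersection.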

Definition of sigma algebra

B, a collection of subsets of S, is a sigma algebra if it satisfies the following properties: 1) The empty set is contained in B 2) If A is in B, then A^c is in B 3) If A1, A2, A3, … are in B, then ∪Ai is in B

What is the largest number of sets in a sigma algebra B of subsets of a sample space S with n elements?

2^n (B is then the collection of all subsets of S)
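For a finite S, the collection of all subsets (the power set) has 2^n members and satisfies all three sigma algebra properties, while {∅, S} is the smallest sigma algebra. The sketch below checks this for a three-element S; `power_set` and `is_sigma_algebra` are hypothetical helpers, and the union check only covers finite collections, where closure under pairwise unions is enough.

```python
from itertools import chain, combinations

def power_set(S):
    """All subsets of the finite set S, as frozensets."""
    items = list(S)
    return {frozenset(c) for c in chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1))}

def is_sigma_algebra(B, S):
    """Check the three properties for a finite collection B of subsets of S.
    When B is finite, closure under pairwise unions implies closure under
    countable unions, so pairwise unions suffice here."""
    S = frozenset(S)
    has_empty = frozenset() in B
    closed_complement = all(S - A in B for A in B)
    closed_union = all(A | C in B for A in B for C in B)
    return has_empty and closed_complement and closed_union

S = {"a", "b", "c"}
B = power_set(S)
print(len(B), 2 ** len(S))                                  # 8 8
print(is_sigma_algebra(B, S))                               # True
print(is_sigma_algebra({frozenset(), frozenset(S)}, S))     # True (smallest)
print(is_sigma_algebra({frozenset(), frozenset({"a"})}, S)) # False: {a}^c missing
```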

Definition of probability

Given a sample space S with sigma algebra B, a probability function (or measure) is any real-valued function P with domain B that satisfies the Kolmogorov Axioms.

Kolmogorov Axioms

1) P(A) ≥ 0 for every A in B 2) P(S) = 1 3) If A1, A2, A3, … in B are pairwise mutually exclusive, then P(∪Ai) = Σ P(Ai)
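On a finite sample space the axioms can be checked directly. This sketch assumes a fair six-sided die with B taken to be all subsets of S; `weights` and `P` are illustrative names. Only finite additivity can be tested this way, since code cannot enumerate a countably infinite sequence of events.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical example: a fair six-sided die, with B = all subsets of S
S = frozenset(range(1, 7))
weights = {s: Fraction(1, 6) for s in S}

def P(A):
    """Probability of event A: the sum of the point masses of its outcomes."""
    return sum((weights[s] for s in A), Fraction(0))

# Axiom 1: nonnegativity, checked on every event since S is small
events = [frozenset(c) for r in range(len(S) + 1) for c in combinations(S, r)]
assert all(P(A) >= 0 for A in events)
# Axiom 2: P(S) = 1
assert P(S) == 1
# Axiom 3 (finite case): additivity over pairwise disjoint events
A1, A2, A3 = frozenset({1}), frozenset({2, 3}), frozenset({6})
assert P(A1 | A2 | A3) == P(A1) + P(A2) + P(A3)
print(P(A1 | A2 | A3))  # 2/3
```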

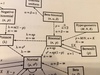

1) Gamma Distribution with different parameterizations

2) Expected values and variances of those distributions

3) Gamma function

4) Properties of the Gamma function

5) MGF
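A minimal Python sketch, assuming the shape/scale parameterization f(x) = x^(α−1) e^(−x/β) / (Γ(α) β^α) for x > 0, under which E(X) = αβ, Var(X) = αβ², and M(t) = (1 − βt)^(−α) for t < 1/β. With rate λ = 1/β these become α/λ, α/λ², and (1 − t/λ)^(−α). The code spot-checks the Gamma function properties Γ(α+1) = αΓ(α), Γ(n) = (n−1)!, and Γ(1/2) = √π with `math.gamma`, then confirms the moments by numerical integration. The helper names and parameter values are illustrative.

```python
import math

# Properties of the Gamma function Γ(α) = ∫_0^∞ t^(α-1) e^(-t) dt
assert math.isclose(math.gamma(5), math.factorial(4))          # Γ(n) = (n-1)! for integer n
assert math.isclose(math.gamma(3.7), 2.7 * math.gamma(2.7))    # Γ(α+1) = α Γ(α)
assert math.isclose(math.gamma(0.5), math.sqrt(math.pi))       # Γ(1/2) = √π

def gamma_pdf(x, alpha, beta):
    """Gamma density with shape alpha and SCALE beta (assumed parameterization), x > 0."""
    return x ** (alpha - 1) * math.exp(-x / beta) / (math.gamma(alpha) * beta ** alpha)

def gamma_moments(alpha, beta):
    """Mean alpha*beta and variance alpha*beta^2 (scale form); with rate
    lam = 1/beta these become alpha/lam and alpha/lam^2."""
    return alpha * beta, alpha * beta ** 2

def gamma_mgf(t, alpha, beta):
    """M(t) = (1 - beta t)^(-alpha), defined only for t < 1/beta."""
    if t >= 1 / beta:
        raise ValueError("the MGF is undefined for t >= 1/beta")
    return (1 - beta * t) ** (-alpha)

# Numerical cross-check of the moments with a midpoint Riemann sum on (0, 200]
alpha, beta = 2.0, 3.0
n = 200_000
h = 200 / n
xs = [(k + 0.5) * h for k in range(n)]
mean = h * sum(x * gamma_pdf(x, alpha, beta) for x in xs)
second = h * sum(x * x * gamma_pdf(x, alpha, beta) for x in xs)
print(mean, second - mean ** 2, gamma_moments(alpha, beta))
```

The upper limit 200 is an arbitrary truncation; for α = 2 and β = 3 the mass beyond it is negligible.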

1) Exponential Distribution with different parameterizations

2) Different expected values and variances

3) MGF
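A sketch of the two common parameterizations, assuming scale β with f(x) = (1/β) e^(−x/β) (mean β, variance β², M(t) = 1/(1 − βt) for t < 1/β) and rate λ = 1/β with f(x) = λ e^(−λx) (mean 1/λ, variance 1/λ², M(t) = λ/(λ − t) for t < λ). The exponential is the Gamma case α = 1. As an illustration of how an MGF is used, `moments_from_mgf` (a hypothetical helper) recovers E(X) = M′(0) and Var(X) = M″(0) − M′(0)² by central finite differences.

```python
import math

def exp_scale(beta):
    """Exponential with SCALE beta: f(x) = (1/beta) e^(-x/beta), x > 0."""
    def mgf(t):
        return 1 / (1 - beta * t)          # valid only for t < 1/beta
    return beta, beta ** 2, mgf            # mean, variance, MGF

def exp_rate(lam):
    """Exponential with RATE lam = 1/beta: f(x) = lam e^(-lam x), x > 0."""
    def mgf(t):
        return lam / (lam - t)             # valid only for t < lam
    return 1 / lam, 1 / lam ** 2, mgf

def moments_from_mgf(mgf, h=1e-4):
    """Approximate E[X] = M'(0) and Var(X) = M''(0) - M'(0)^2 by central differences."""
    m1 = (mgf(h) - mgf(-h)) / (2 * h)
    m2 = (mgf(h) - 2 * mgf(0) + mgf(-h)) / h ** 2
    return m1, m2 - m1 ** 2

mean_s, var_s, mgf_s = exp_scale(2.0)
mean_r, var_r, mgf_r = exp_rate(0.5)       # the same distribution, since lam = 1/beta
print(mean_s, var_s, mean_r, var_r)        # 2.0 4.0 2.0 4.0
print(moments_from_mgf(mgf_s))             # approximately (2.0, 4.0)
```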

1) Bernoulli Distribution

2) Expected value and variance

3) MGF
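For X ~ Bernoulli(p), with P(X = 1) = p and P(X = 0) = 1 − p, the standard results are E(X) = p, Var(X) = p(1 − p), and M(t) = (1 − p) + pe^t. This sketch computes all three directly from the two-point pmf and compares them with the formulas; `bernoulli_summary` is a hypothetical helper.

```python
import math

def bernoulli_summary(p):
    """Mean, variance, and MGF of Bernoulli(p), computed directly from the pmf
    P(X = 1) = p, P(X = 0) = 1 - p."""
    pmf = {0: 1 - p, 1: p}
    mean = sum(x * q for x, q in pmf.items())
    var = sum((x - mean) ** 2 * q for x, q in pmf.items())
    def mgf(t):
        return sum(math.exp(t * x) * q for x, q in pmf.items())
    return mean, var, mgf

p = 0.3
mean, var, mgf = bernoulli_summary(p)
assert math.isclose(mean, p)                                   # E[X] = p
assert math.isclose(var, p * (1 - p))                          # Var(X) = p(1 - p)
assert math.isclose(mgf(1.2), (1 - p) + p * math.exp(1.2))     # M(t) = (1 - p) + p e^t
```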

1) Geometric Distribution with different parameterizations

2) Expected values and variances

3) MGF (Also special rule to help solve this)
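Two parameterizations are common, both with success probability p and q = 1 − p. (1) X = number of trials until the first success: P(X = x) = pq^(x−1) for x = 1, 2, …, with E(X) = 1/p, Var(X) = q/p², M(t) = pe^t / (1 − qe^t). (2) Y = number of failures before the first success: P(Y = y) = pq^y for y = 0, 1, …, with E(Y) = q/p, Var(Y) = q/p², M(t) = p / (1 − qe^t). Both MGFs exist only for t < −ln(q). The MGF derivations rest on the geometric series Σ r^k = 1/(1 − r) for |r| < 1, which is presumably the "special rule" the card refers to. The sketch checks the formulas with long truncated sums; the truncation point and parameter values are arbitrary choices.

```python
import math

def truncated_moments(pmf, support, t):
    """Mean, variance, and MGF at t from a pmf summed over a (truncated) support."""
    mean = sum(x * pmf(x) for x in support)
    second = sum(x * x * pmf(x) for x in support)
    mgf_t = sum(math.exp(t * x) * pmf(x) for x in support)
    return mean, second - mean ** 2, mgf_t

p, t = 0.25, 0.1           # need t < -log(1 - p) ≈ 0.2877 for the MGF to exist
q = 1 - p

# Parameterization 1: X = number of trials until the first success, x = 1, 2, ...
m, v, M = truncated_moments(lambda x: p * q ** (x - 1), range(1, 2000), t)
assert math.isclose(m, 1 / p) and math.isclose(v, q / p ** 2)
assert math.isclose(M, p * math.exp(t) / (1 - q * math.exp(t)))

# Parameterization 2: Y = number of failures before the first success, y = 0, 1, ...
m, v, M = truncated_moments(lambda y: p * q ** y, range(0, 2000), t)
assert math.isclose(m, q / p) and math.isclose(v, q / p ** 2)
assert math.isclose(M, p / (1 - q * math.exp(t)))
```

The two variances agree because Y = X − 1 is only a shift.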

1) Poisson Distribution

2) Expected value and variance

3) MGF
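For X ~ Poisson(λ) with P(X = x) = e^(−λ) λ^x / x! for x = 0, 1, …, the mean and variance are both λ and M(t) = exp(λ(e^t − 1)). This sketch confirms those identities numerically by truncating the infinite sums at x = 100 (an assumption that is safe for the small λ used here).

```python
import math

def poisson_pmf(k, lam):
    """P(X = k) = e^(-lam) lam^k / k!"""
    return math.exp(-lam) * lam ** k / math.factorial(k)

lam, t = 3.5, 0.4
support = range(100)   # truncation; the tail beyond k = 100 is negligible for lam = 3.5
mean = sum(k * poisson_pmf(k, lam) for k in support)
var = sum(k * k * poisson_pmf(k, lam) for k in support) - mean ** 2
mgf = sum(math.exp(t * k) * poisson_pmf(k, lam) for k in support)

assert math.isclose(mean, lam) and math.isclose(var, lam)     # E[X] = Var(X) = lam
assert math.isclose(mgf, math.exp(lam * (math.exp(t) - 1)))    # M(t) = exp(lam(e^t - 1))
```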

1) Binomial Distribution

2) Expected value and variance

3) MGF
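For X ~ Binomial(n, p) with P(X = k) = C(n, k) p^k (1 − p)^(n−k), the results are E(X) = np, Var(X) = np(1 − p), and M(t) = (pe^t + 1 − p)^n. Because the support is finite, this sketch can sum the pmf exactly over k = 0, …, n; the values of n, p, and t are arbitrary.

```python
import math

def binomial_pmf(k, n, p):
    """P(X = k) = C(n, k) p^k (1 - p)^(n - k)"""
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)

n, p, t = 12, 0.35, 0.7
ks = range(n + 1)
mean = sum(k * binomial_pmf(k, n, p) for k in ks)
var = sum(k * k * binomial_pmf(k, n, p) for k in ks) - mean ** 2
mgf = sum(math.exp(t * k) * binomial_pmf(k, n, p) for k in ks)

assert math.isclose(sum(binomial_pmf(k, n, p) for k in ks), 1.0)
assert math.isclose(mean, n * p) and math.isclose(var, n * p * (1 - p))
assert math.isclose(mgf, (p * math.exp(t) + 1 - p) ** n)
```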

1) Beta Distribution

2) Expected value and variance

3) Beta function

4) E(X^n), the nth moment
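Assuming the standard Beta(α, β) density x^(α−1)(1 − x)^(β−1) / B(α, β) on (0, 1), the Beta function is B(α, β) = Γ(α)Γ(β)/Γ(α + β), and the nth moment is E(X^n) = B(α + n, β)/B(α, β) = Π_{k=0}^{n−1} (α + k)/(α + β + k). Taking n = 1 and n = 2 gives E(X) = α/(α + β) and Var(X) = αβ / ((α + β)²(α + β + 1)). A sketch checking these, with `a` and `b` standing in for α and β:

```python
import math

def beta_fn(a, b):
    """Beta function B(a, b) = Γ(a)Γ(b) / Γ(a + b)."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)

def beta_raw_moment(n, a, b):
    """E[X^n] = B(a + n, b) / B(a, b) for X ~ Beta(a, b)."""
    return beta_fn(a + n, b) / beta_fn(a, b)

a, b = 2.5, 4.0
mean = beta_raw_moment(1, a, b)
var = beta_raw_moment(2, a, b) - mean ** 2

assert math.isclose(mean, a / (a + b))
assert math.isclose(var, a * b / ((a + b) ** 2 * (a + b + 1)))
# Equivalent product form: E[X^n] = prod_{k=0}^{n-1} (a + k) / (a + b + k)
assert math.isclose(beta_raw_moment(3, a, b),
                    math.prod((a + k) / (a + b + k) for k in range(3)))
```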

1) Bivariate Normal

2) Conditional expectation

3) Conditional variance
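For (X, Y) bivariate normal with means μX, μY, standard deviations σX, σY, and correlation ρ, the conditional distribution of Y given X = x is normal with E(Y | X = x) = μY + ρ(σY/σX)(x − μX) and Var(Y | X = x) = σY²(1 − ρ²), which does not depend on x. The sketch below simulates (X, Y) from two independent standard normals (a standard construction, assumed here) and compares the sample mean and variance of Y among draws with X near x0 against those formulas. The parameter values, window width, and seed are arbitrary, so the simulated numbers only approximate the exact ones.

```python
import math
import random
import statistics

def conditional_y_given_x(x, mu_x, mu_y, sd_x, sd_y, rho):
    """E[Y | X = x] and Var(Y | X = x) for a bivariate normal (X, Y)."""
    mean = mu_y + rho * (sd_y / sd_x) * (x - mu_x)
    var = sd_y ** 2 * (1 - rho ** 2)
    return mean, var

mu_x, mu_y, sd_x, sd_y, rho = 0.0, 1.0, 1.0, 2.0, 0.6
x0, width = 1.0, 0.05

# Simulate (X, Y) from two independent standard normals Z1, Z2:
#   X = mu_x + sd_x Z1,  Y = mu_y + sd_y (rho Z1 + sqrt(1 - rho^2) Z2)
rng = random.Random(0)
ys_near_x0 = []
for _ in range(200_000):
    z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
    x = mu_x + sd_x * z1
    if abs(x - x0) < width:                      # keep draws with X close to x0
        ys_near_x0.append(mu_y + sd_y * (rho * z1 + math.sqrt(1 - rho ** 2) * z2))

exact_mean, exact_var = conditional_y_given_x(x0, mu_x, mu_y, sd_x, sd_y, rho)
sim_mean = statistics.fmean(ys_near_x0)
sim_var = statistics.variance(ys_near_x0)
print(exact_mean, exact_var)
print(sim_mean, sim_var)       # should be close to the exact values
```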

1) Normal and standard normal Distributions

2) Expected values and variances

3) MGFs
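For X ~ N(μ, σ²), E(X) = μ, Var(X) = σ², and M(t) = exp(μt + σ²t²/2); the standard normal Z = (X − μ)/σ has mean 0, variance 1, and M(t) = exp(t²/2). This sketch uses the standard library's `statistics.NormalDist` for the density and a midpoint rule (a hypothetical `integrate` helper) to confirm the moments and MGF numerically, then checks the standardization identity on the CDF. The parameter values and the ±12σ integration range are arbitrary choices.

```python
import math
from statistics import NormalDist

def normal_mgf(t, mu, sigma):
    """M(t) = exp(mu t + sigma^2 t^2 / 2); the standard normal case is exp(t^2 / 2)."""
    return math.exp(mu * t + sigma ** 2 * t ** 2 / 2)

def integrate(f, lo, hi, steps=50_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum(f(lo + (k + 0.5) * h) for k in range(steps))

mu, sigma, t = 1.5, 2.0, 0.3
X = NormalDist(mu, sigma)
lo, hi = mu - 12 * sigma, mu + 12 * sigma     # mass beyond 12 sigma is negligible

mean = integrate(lambda x: x * X.pdf(x), lo, hi)
var = integrate(lambda x: (x - mu) ** 2 * X.pdf(x), lo, hi)
mgf = integrate(lambda x: math.exp(t * x) * X.pdf(x), lo, hi)

# Standardizing: P(X <= x) = P(Z <= (x - mu) / sigma) with Z ~ N(0, 1)
Z = NormalDist()
assert math.isclose(X.cdf(2.7), Z.cdf((2.7 - mu) / sigma))
print(mean, var, mgf, normal_mgf(t, mu, sigma))
```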

1) Continuous Uniform Distribution

2) Expected value and variance

3) MGF
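For X ~ Uniform(a, b) with density 1/(b − a) on [a, b], E(X) = (a + b)/2, Var(X) = (b − a)²/12, and M(t) = (e^(tb) − e^(ta)) / (t(b − a)) for t ≠ 0, with M(0) = 1. A sketch of these formulas with a Riemann-sum cross-check; `uniform_summary` and the endpoints 2 and 5 are illustrative.

```python
import math

def uniform_summary(a, b):
    """Mean, variance, and MGF of the continuous Uniform(a, b) distribution."""
    mean = (a + b) / 2
    var = (b - a) ** 2 / 12
    def mgf(t):
        if t == 0:
            return 1.0                                   # M(0) = E[e^0] = 1
        return (math.exp(t * b) - math.exp(t * a)) / (t * (b - a))
    return mean, var, mgf

a, b = 2.0, 5.0
mean, var, mgf = uniform_summary(a, b)
print(mean, var)                                          # 3.5 0.75

# Cross-check with a midpoint Riemann sum against the density 1 / (b - a)
n = 100_000
h = (b - a) / n
xs = [a + (k + 0.5) * h for k in range(n)]
num_mean = h * sum(xs) / (b - a)
num_var = h * sum((x - mean) ** 2 for x in xs) / (b - a)
num_mgf = h * sum(math.exp(0.4 * x) for x in xs) / (b - a)
```

The separate branch at t = 0 is needed because the closed form is 0/0 there; its limit as t → 0 is 1.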

1) Multinomial Distribution

2) Expected value and variance

3) Multinomial Theorem

4) Cov(X_i, X_j)
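The multinomial theorem states that for an integer n ≥ 0, (x1 + … + xk)^n = Σ n!/(n1!⋯nk!) x1^n1 ⋯ xk^nk, summed over all nonnegative (n1, …, nk) with n1 + … + nk = n. Setting xi = pi shows the multinomial pmf sums to (p1 + … + pk)^n = 1. The standard moments are E(Xi) = npi, Var(Xi) = npi(1 − pi), and Cov(Xi, Xj) = −npipj for i ≠ j. This sketch checks all of them exactly with `Fraction` arithmetic for a small hypothetical case (n = 6, k = 3).

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod

def multinomial_pmf(xs, ps):
    """n! / (x_1! ... x_k!) * p_1^x_1 ... p_k^x_k, with n = sum(xs)."""
    coef = factorial(sum(xs)) // prod(factorial(x) for x in xs)
    return coef * prod(p ** x for p, x in zip(ps, xs))

n = 6
ps = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]
# Every outcome (x_1, x_2, x_3) with x_i >= 0 and x_1 + x_2 + x_3 = n
outcomes = [xs for xs in product(range(n + 1), repeat=3) if sum(xs) == n]
pmf = {xs: multinomial_pmf(xs, ps) for xs in outcomes}

def E(g):
    """Exact expectation of g(X) under the multinomial pmf."""
    return sum(g(xs) * q for xs, q in pmf.items())

# Multinomial theorem with x_i = p_i: the pmf sums to (p_1 + p_2 + p_3)^n = 1
assert sum(pmf.values()) == 1
for i in range(3):
    assert E(lambda xs: xs[i]) == n * ps[i]                                   # E[X_i]
    assert E(lambda xs: (xs[i] - n * ps[i]) ** 2) == n * ps[i] * (1 - ps[i])  # Var(X_i)
i, j = 0, 1
cov = E(lambda xs: (xs[i] - n * ps[i]) * (xs[j] - n * ps[j]))
assert cov == -n * ps[i] * ps[j]                                              # Cov(X_i, X_j)
print(cov)  # -1
```

The covariance is negative because the counts share a fixed total n: a larger Xi leaves less room for Xj.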

Bonferroni Inequality

Pr(A∩B)≥Pr(A)+Pr(B)-1
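The inequality follows in one step from P(A∪B) = P(A) + P(B) − P(A∩B) together with P(A∪B) ≤ 1. This sketch checks it exhaustively over every pair of events for a fair die (an illustrative sample space) and shows a pair where it holds with equality, which happens exactly when A∪B = S.

```python
from fractions import Fraction
from itertools import combinations

S = range(1, 7)                       # fair die, each outcome has probability 1/6
events = [frozenset(c) for r in range(7) for c in combinations(S, r)]

def P(A):
    """Probability of event A under equally likely outcomes."""
    return Fraction(len(A), 6)

# Check P(A ∩ B) >= P(A) + P(B) - 1 for every pair of events
violations = [(A, B) for A in events for B in events
              if P(A & B) < P(A) + P(B) - 1]
print(len(events), len(violations))   # 64 0

# Equality when A ∪ B = S
A, B = frozenset({1, 2, 3, 4}), frozenset({3, 4, 5, 6})
print(P(A & B), P(A) + P(B) - 1)      # 1/3 1/3
```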

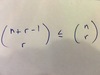

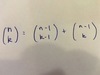

Counting table: ordered vs. unordered, with vs. without replacement
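Assuming the card refers to the standard table for choosing r objects from n: ordered without replacement gives n!/(n − r)!, ordered with replacement gives n^r, unordered without replacement gives C(n, r), and unordered with replacement gives C(n + r − 1, r). Python's `itertools` happens to have one generator per cell, so this sketch enumerates each case for n = 5, r = 3 and compares the count with the formula.

```python
from itertools import combinations, combinations_with_replacement, permutations, product
from math import comb, factorial

n, r = 5, 3
items = range(n)

# (number of enumerated arrangements, closed-form count) for each cell of the table
counts = {
    ("ordered", "without replacement"):
        (len(list(permutations(items, r))), factorial(n) // factorial(n - r)),
    ("ordered", "with replacement"):
        (len(list(product(items, repeat=r))), n ** r),
    ("unordered", "without replacement"):
        (len(list(combinations(items, r))), comb(n, r)),
    ("unordered", "with replacement"):
        (len(list(combinations_with_replacement(items, r))), comb(n + r - 1, r)),
}
for case, (enumerated, formula) in counts.items():
    assert enumerated == formula, case
    print(case, formula)
```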

Fundamental Theorem of Counting

For a job that consists of k separate tasks, where the ith task can be accomplished in n_i ways (i = 1, …, k), the entire job can be accomplished in n_1 × n_2 × … × n_k ways
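A small illustration of the theorem: `itertools.product` enumerates every way to carry out all k tasks, and its length equals the product of the n_i. The job (choosing a shirt, pants, and shoes) is a hypothetical example with k = 3, n_1 = 3, n_2 = 2, n_3 = 4.

```python
from itertools import product
from math import prod

# Hypothetical job with k = 3 tasks; task i can be done in n_i ways
task_options = [
    ["shirt A", "shirt B", "shirt C"],                 # n_1 = 3
    ["pants A", "pants B"],                            # n_2 = 2
    ["shoes A", "shoes B", "shoes C", "shoes D"],      # n_3 = 4
]
ways = list(product(*task_options))                    # every way to do all k tasks
assert len(ways) == prod(len(opts) for opts in task_options)
print(len(ways))  # 24
```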