Concurrency Flashcards

(15 cards)

Concurrency

A feature of some programming languages that allows a program to execute different parts of the code at the same time, or to interleave them so that they all make progress. In Java, this is achieved using threads. Some examples include:

- Performing two parts of a calculation simultaneously

- Reading data from one source while writing to another

- Blocking / waiting for some input while performing some calculation
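A minimal sketch of the last example, assuming a hypothetical BlockingDemo class. One thread blocks while waiting for input on a java.util.concurrent.BlockingQueue. Meanwhile, the main thread keeps calculating, and when it finishes it hands the result over through the queue.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class BlockingDemo {
    // Hypothetical example: one thread blocks on input while another computes.
    static String run() throws InterruptedException {
        BlockingQueue<String> input = new ArrayBlockingQueue<>(1);
        StringBuilder received = new StringBuilder();

        Thread waiter = new Thread(() -> {
            try {
                // take() blocks this thread until an element is available.
                received.append(input.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt status
            }
        });
        waiter.start();

        // Meanwhile the main thread keeps calculating.
        long sum = 0;
        for (int i = 1; i <= 1_000; i++) {
            sum += i;
        }
        input.put("sum=" + sum); // unblocks the waiting thread

        waiter.join(); // wait for the waiter to finish before reading `received`
        return received.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // prints "sum=500500"
    }
}
```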

Processes

A process has a self-contained execution environment. A process generally has a complete, private set of basic run-time resources; in particular, each process has its own memory space.

Processes are often seen as synonymous with programs or applications. However, what the user sees as a single application may in fact be a set of cooperating processes. To facilitate communication between processes, most operating systems support Inter Process Communication (IPC) resources, such as pipes and sockets. IPC is used not just for communication between processes on the same system, but also between processes on different systems.

Most implementations of the Java virtual machine run as a single process. A Java application can create additional processes using a ProcessBuilder object.
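A minimal sketch, assuming JDK 11 or later (for Path.of), that uses a hypothetical ChildProcessDemo class. ProcessBuilder starts a second JVM running `java -version`. The child runs in its own memory space, and its output reaches the parent through a pipe, which is one of the IPC mechanisms described above.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

class ChildProcessDemo {
    // Starts a child JVM running `java -version`, collects its output, returns its exit code.
    static int runJavaVersion(List<String> outputLines) throws IOException, InterruptedException {
        // Use the java launcher that belongs to the currently running JVM.
        String javaBin = Path.of(System.getProperty("java.home"), "bin", "java").toString();
        ProcessBuilder pb = new ProcessBuilder(javaBin, "-version");
        pb.redirectErrorStream(true); // `java -version` prints to stderr; merge it into stdout

        Process child = pb.start(); // a separate process with its own memory space
        // Read the child's output (a pipe) before waiting, so a full pipe buffer cannot stall it.
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(child.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                outputLines.add(line);
            }
        }
        return child.waitFor(); // block until the child process exits
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        List<String> lines = new ArrayList<>();
        int exit = runJavaVersion(lines);
        lines.forEach(System.out::println);
        System.out.println("parent pid " + ProcessHandle.current().pid() + ", child exit code " + exit);
    }
}
```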

Threads

Threads are separate lines of execution in the same process (running program) and are therefore often referred to as lightweight processes. Both processes and threads provide an execution environment, but creating a new thread requires fewer resources than creating a new process.

Threads exist within a process — every process has at least one. Threads share the process’s resources, including memory and open files. This makes for efficient, but potentially problematic, communication.

Multithreaded execution is an essential feature of the Java platform. Every application has at least one thread — or several, if you count “system” threads that do things like memory management and signal handling. But from the application programmer’s point of view, you start with just one thread, called the main thread. This thread has the ability to create additional threads.

We use threads to solve embarrassingly parallel problems. However, care must be taken to wait for all the threads to finish before combining their results!

Each thread has its own stack (local variables, method arguments) and program counter (address of next instruction to be executed). Threads in the same process share a heap (space in memory where objects are stored) and static variables.
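A minimal sketch of the stack/heap split, assuming a hypothetical SharedHeapDemo class. Each worker's `local` variable lives on that worker's own stack. The array referenced by the static field `results` lives on the shared heap, so the main thread can read what both workers wrote there. Each worker writes only its own slot, which avoids a race.

```java
import java.util.Arrays;

class SharedHeapDemo {
    // Static field referring to an array on the heap: one copy, visible to every thread.
    static int[] results = new int[2];

    static int[] run() throws InterruptedException {
        Thread[] workers = new Thread[2];
        for (int i = 0; i < workers.length; i++) {
            final int id = i;
            workers[i] = new Thread(() -> {
                int local = 0; // on this worker's own stack; no other thread sees it
                for (int k = 1; k <= 10; k++) {
                    local += k * (id + 1);
                }
                results[id] = local; // shared heap; each worker writes a different slot
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join(); // after join, the worker's writes are visible to this thread
        }
        return results;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(Arrays.toString(run())); // prints [55, 110]
    }
}
```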

In Java, thread classes can extend the java.lang.Thread class or implement the java.lang.Runnable interface. Typically, n threads each do 1 / n of the work, and then the sub-solutions are combined to create the solution.
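A minimal sketch of the two styles, assuming a hypothetical ThreadStyles class whose two tasks each sum 1 to 100. The first subclasses java.lang.Thread and overrides run(). The second implements java.lang.Runnable and passes the task to a Thread.

```java
class ThreadStyles {
    // Style 1: subclass java.lang.Thread and override run().
    static class SumToHundredThread extends Thread {
        long result; // written by this thread; read by others only after join()

        @Override
        public void run() {
            for (int i = 1; i <= 100; i++) {
                result += i;
            }
        }
    }

    // Style 2: implement java.lang.Runnable and hand the task to a Thread.
    static class SumToHundredTask implements Runnable {
        long result;

        @Override
        public void run() {
            for (int i = 1; i <= 100; i++) {
                result += i;
            }
        }
    }

    static long[] runBoth() throws InterruptedException {
        SumToHundredThread t1 = new SumToHundredThread();
        SumToHundredTask task = new SumToHundredTask();
        Thread t2 = new Thread(task);
        t1.start();
        t2.start();
        t1.join(); // wait for both before reading their results
        t2.join();
        return new long[] {t1.result, task.result};
    }

    public static void main(String[] args) throws InterruptedException {
        long[] r = runBoth();
        System.out.println(r[0] + " " + r[1]); // prints "5050 5050"
    }
}
```

Implementing Runnable is often preferred. The class remains free to extend some other class, and the task is kept separate from the thread that runs it.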

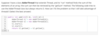

Thread non-determinism

Thread non-determinism means that a program’s results cannot be predicted in advance: running the same code twice may produce different outcomes. It is a potential problem when using threads to implement concurrency in Java. It’s not necessarily a bug in the code, since thread scheduling is out of the programmer’s control, but it is the programmer’s responsibility to identify it and safeguard against it. Thread non-determinism occurs when multiple threads perform different operations simultaneously on the same object. The results can vary because the order in which the threads execute is not guaranteed: the threads run at the same time, and the operating system decides when each one is scheduled.

For example, if a program starts thread t1 and then thread t2, we might expect t1 to execute first, but that cannot be guaranteed: the two threads may run in the opposite order.
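A minimal sketch, assuming a hypothetical OrderDemo class. It starts t1 before t2 and records which one actually runs first, using a thread-safe CopyOnWriteArrayList so the log itself is not subject to a race. Both orders are possible, and how often each appears varies between runs and machines.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class OrderDemo {
    // Starts t1 before t2 and returns the order in which they actually ran.
    static List<String> runOnce() throws InterruptedException {
        List<String> log = new CopyOnWriteArrayList<>(); // thread-safe list
        Thread t1 = new Thread(() -> log.add("t1"));
        Thread t2 = new Thread(() -> log.add("t2"));
        t1.start(); // started first...
        t2.start(); // ...but the scheduler may still run t2's add() first
        t1.join();
        t2.join();
        return log; // [t1, t2] or [t2, t1]
    }

    public static void main(String[] args) throws InterruptedException {
        int t2First = 0;
        for (int i = 0; i < 10_000; i++) {
            if (runOnce().get(0).equals("t2")) {
                t2First++;
            }
        }
        // The count differs from run to run; on some machines it may even be 0.
        System.out.println("t2 ran first in " + t2First + " of 10000 runs");
    }
}
```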

Thread race conditions

When multiple threads operate on the same piece of data, this can lead not only to non-deterministic results but also to incorrect behavior, if the result of an operation is lost. This situation is known as a “race condition.”

For example, a race condition could occur if multiple threads call the deposit method on the same account object. Although the statement that updates the balance is a single Java statement, it is actually three operations: 1) reading the current balance, 2) adding the amount to it, holding the sum in a temporary location, and 3) writing the new balance back to memory. If another thread’s operations on the same data interleave with these three steps, the result of one of the updates may be lost, making the final balance wrong.
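A minimal sketch of the problem, assuming a hypothetical UnsafeAccount class whose deposit method is not synchronized. Two threads each deposit 1 a hundred thousand times into the same account. The correct total is 200000, but lost updates often make the final balance smaller, and the exact value varies between runs.

```java
class UnsafeAccount {
    private int balance = 0;

    // Not synchronized: the read, the add and the write can interleave between threads.
    void deposit(int amount) {
        balance = balance + amount;
    }

    int getBalance() {
        return balance;
    }

    // Two threads each deposit 1, depositsPerThread times, into the same account.
    static int runRace(int depositsPerThread) throws InterruptedException {
        UnsafeAccount account = new UnsafeAccount();
        Runnable depositor = () -> {
            for (int i = 0; i < depositsPerThread; i++) {
                account.deposit(1);
            }
        };
        Thread t1 = new Thread(depositor);
        Thread t2 = new Thread(depositor);
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return account.getBalance();
    }

    public static void main(String[] args) throws InterruptedException {
        // Should be 200000, but lost updates often make the printed value smaller.
        System.out.println(runRace(100_000));
    }
}
```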

Thread synchronization

Synchronization, or mutual exclusion, can be used to avoid race conditions. This is done by making the read/write combination atomic, meaning that no other thread can interleave its own operations on the same data partway through. We enforce this by allowing only one thread at a time to execute the critical section, the code where a race condition could occur.

Synchronized method

A method that only one thread at a time may execute on a given object. If a thread is executing a synchronized method on an object, any other thread that tries to execute a synchronized method on the same object must wait (block) until the first thread leaves the method.

To make a method synchronized in Java, add the synchronized keyword to its declaration.
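A minimal sketch, assuming a hypothetical SafeAccount class with the same two-thread deposit test as the race-condition example, except that deposit and getBalance are declared synchronized. Because a thread must hold the account's lock to run either method, no deposit is lost and the balance always comes out to 200000.

```java
class SafeAccount {
    private int balance = 0;

    // synchronized: a thread must hold this object's lock to run the method,
    // so the read, the add and the write happen as one atomic step.
    synchronized void deposit(int amount) {
        balance = balance + amount;
    }

    // Also synchronized, so a reader sees the latest value written under the lock.
    synchronized int getBalance() {
        return balance;
    }

    static int runDeposits(int depositsPerThread) throws InterruptedException {
        SafeAccount account = new SafeAccount();
        Runnable depositor = () -> {
            for (int i = 0; i < depositsPerThread; i++) {
                account.deposit(1);
            }
        };
        Thread t1 = new Thread(depositor);
        Thread t2 = new Thread(depositor);
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return account.getBalance();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDeposits(100_000)); // prints 200000
    }
}
```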

Synchronized blocks

A more fine-grained way to ensure mutual exclusion than synchronizing a whole method: only a section of the method is locked. This allows a critical section that’s smaller than an entire method, and also multiple critical sections that different threads can run simultaneously. Each synchronized block uses an object as its lock. While an object’s lock is held, no other thread can enter a synchronized block that uses that object, but another thread can enter a synchronized block that uses a different object.

This is illustrated in the following code:
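A minimal sketch, assuming a hypothetical TwoCounters class with two independent counters, a and b. Each counter is guarded by its own lock object. A thread updating a can therefore run at the same time as a thread updating b, while threads updating the same counter still take turns.

```java
class TwoCounters {
    private final Object lockA = new Object(); // guards a
    private final Object lockB = new Object(); // guards b
    private int a = 0;
    private int b = 0;

    void incrementA() {
        // Code outside the block (none here) would not need the lock.
        synchronized (lockA) { // only threads using lockA wait here
            a++;
        }
    }

    void incrementB() {
        synchronized (lockB) { // independent of lockA
            b++;
        }
    }

    int getA() { synchronized (lockA) { return a; } }

    int getB() { synchronized (lockB) { return b; } }

    // Two threads increment a and two threads increment b, n times each.
    static int[] run(int n) throws InterruptedException {
        TwoCounters c = new TwoCounters();
        Runnable workA = () -> { for (int i = 0; i < n; i++) c.incrementA(); };
        Runnable workB = () -> { for (int i = 0; i < n; i++) c.incrementB(); };
        Thread[] threads = {
            new Thread(workA), new Thread(workA), new Thread(workB), new Thread(workB)
        };
        for (Thread t : threads) t.start();
        for (Thread t : threads) t.join();
        return new int[] {c.getA(), c.getB()};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = run(10_000);
        System.out.println(r[0] + " " + r[1]); // prints "20000 20000"
    }
}
```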

Critical section

A piece of code where a race condition could occur.

Stack

The area of memory where local variables and method arguments are stored. Each thread has its own stack, and also its own program counter, which holds the address of the next instruction to execute.

Heap

The area of memory where objects are stored. It is also where static variables (the closest thing Java has to global variables) are stored, which is why every thread in a process can access them.

What is meant by an “embarrassingly parallel problem?”

A problem whose solution can so obviously be split up and done concurrently, or in parallel, that it’s kind of embarrassing! One example is summing the elements of an array. There are a couple of ways to solve this problem using concurrency, but one simple approach is to use multiple threads: each of n threads does 1/n of the work, and their partial results are then combined into the solution. For example, if two threads are created, the first thread calculates the sum of the first half of the array, sum1, while the second thread simultaneously calculates the sum of the second half, sum2. The total number of additions stays the same, but because the two halves are summed at the same time, the elapsed time can ideally be cut roughly in half. At the end, you just add the two partial sums: total = sum1 + sum2.

The following code shows how this could be implemented:
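A minimal sketch of the two-thread version, assuming a hypothetical SumThread class that extends Thread and exposes its partial sum through a getSum( ) method. The main thread starts both halves and joins both threads before adding sum1 + sum2.

```java
class SumThread extends Thread {
    private final int[] data;
    private final int from; // inclusive
    private final int to;   // exclusive
    private long sum = 0;

    SumThread(int[] data, int from, int to) {
        this.data = data;
        this.from = from;
        this.to = to;
    }

    @Override
    public void run() {
        for (int i = from; i < to; i++) {
            sum += data[i];
        }
    }

    long getSum() {
        return sum; // only meaningful once this thread has finished
    }

    static long parallelSum(int[] data) throws InterruptedException {
        int mid = data.length / 2;
        SumThread t1 = new SumThread(data, 0, mid);           // first half  -> sum1
        SumThread t2 = new SumThread(data, mid, data.length); // second half -> sum2
        t1.start();
        t2.start();
        t1.join(); // wait for both halves before reading the results
        t2.join();
        return t1.getSum() + t2.getSum(); // total = sum1 + sum2
    }

    public static void main(String[] args) throws InterruptedException {
        int[] data = new int[1_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(parallelSum(data)); // prints 500500
    }
}
```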

What purpose does the .join( ) method serve?

It makes the calling thread wait until the thread it is called on has finished, so the program uses the results only after they are complete and the correct results are received!

Without join( ), the main thread doesn’t wait for the threads’ run( ) methods to finish; it calls getSum( ) right away and may read incomplete sums. To fix the problem, call join( ) on each thread after the start( ) calls and before reading the results (join( ) throws InterruptedException, which the calling method must catch or declare):

```java
t1.join();
t2.join();
```

What might the following code print?

Either 1 or 2, because of the race condition.
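A minimal, hypothetical program that behaves this way, assuming a RacePrint class in which two threads each increment the shared static field x once without synchronization. If one thread finishes its read-add-write before the other reads x, the result is 2. If both threads read 0 before either writes back, one update is lost and the result is 1.

```java
class RacePrint {
    static int x = 0; // shared by both threads, not synchronized

    static int runOnce() throws InterruptedException {
        x = 0;
        Thread t1 = new Thread(() -> x++); // x++ is read, add 1, write back
        Thread t2 = new Thread(() -> x++);
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return x; // 2 if the increments did not overlap, 1 if one update was lost
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runOnce()); // 1 or 2
    }
}
```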